Using the terminal in data science is a useful and fast way to do exploratory data analysis. You may be working with a data set that needs to be cleaned and is too large to open easily in pandas. It's also a good way to get a sense of what the data set contains before you begin the cleaning stage. This tutorial will walk you through a few great commands to help you work on your command-line skills.

A good reason to familiarize yourself with the terminal, or command line, is to get better at writing simple shell scripts and running bash. It is also a good way to begin moving away from writing code predominantly in the beautiful world of Jupyter. Knowing a few commands for simple data analysis in bash will speed up your process, and you will not always have to rely on an engineer to produce the data in a manageable form. I can sometimes become impatient waiting for a data set. The data set may also not be very clean: you may need to parse log files, unformatted txt files, or IoT data.

We will be looking at the federal surveillance planes data provided by BuzzFeed. cURL is a great command to get to know. It is often used to download data from FTP sites and webpages, and to make API calls.

mkdir ds_commandline

cd ds_commandline

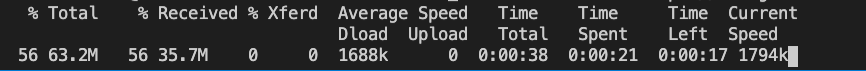

curl -O https://raw.githubusercontent.com/BuzzFeedNews/2016-04-federal-surveillance-planes/master/data/feds/feds1.csv

The commands above created a folder called "ds_commandline", changed directories into it with "cd", and finally used cURL to download the file. The -O flag saves the file under its remote name, feds1.csv.

Show a list of items in a directory.

ls -l

What am I seeing? The ls command shows the contents of a directory, and adding -l gives the long format, which shows metadata about those contents (permissions, owner, size, and modification time).

Copying a file

cp filename new_filename

Moving a file

mv folder/filename new_folder/filename

Read a File

cat filename.txt

Show path

pwd
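To see how these basic commands fit together, here is a minimal sketch that runs them in a throwaway directory. The file and folder names (notes.txt, notes_backup.txt, archive) are made up for illustration and are not part of the BuzzFeed data.

```bash
# Work in a throwaway directory so nothing important is touched.
workdir=$(mktemp -d)
cd "$workdir"

# Create a tiny practice file (hypothetical name and contents).
printf 'hello\nworld\n' > notes.txt

cp notes.txt notes_backup.txt    # copy: both files now exist
mkdir archive
mv notes_backup.txt archive/     # move the copy into archive/

pwd                              # print the current directory
ls -l archive                    # confirm the file landed there
cat archive/notes_backup.txt     # print its contents
```

Note that mv also renames a file: the destination path can end in a different filename.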

```bash
wc -l feds1.csv
```

You should get a result of 397,041 rows.
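Keep in mind that wc -l counts every line, including the header. If you want the number of data rows only, one option is to skip the first line with tail before counting. The sketch below uses a small made-up sample file in place of feds1.csv, so the values are illustrative only.

```bash
# Hypothetical three-row sample standing in for feds1.csv.
sample=$(mktemp)
printf 'adshex,flight_id,latitude\nA1,f1,32.1\nA1,f1,32.2\nA2,f2,40.7\n' > "$sample"

# All lines, header included. tr strips the padding some wc versions add.
total_lines=$(wc -l < "$sample" | tr -d ' ')

# tail -n +2 starts output at line 2, which drops the header.
data_rows=$(tail -n +2 "$sample" | wc -l | tr -d ' ')

echo "lines: $total_lines, data rows: $data_rows"
# prints: lines: 4, data rows: 3
```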

Let's try to do more profiling of the data.

How many columns are there?

head -1 feds1.csv | sed 's/[^,]//g' | wc -c

This command removes every character other than the commas from the first line. wc -c then counts the remaining commas plus the trailing newline, which equals the number of columns.
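If the sed approach feels indirect, awk can report the field count itself. This is an alternative sketch, not the method used above, and it runs on a made-up sample file. Like the sed version, it assumes no column name contains a quoted comma.

```bash
# Hypothetical sample header plus one row.
sample=$(mktemp)
printf 'adshex,flight_id,latitude\nA1,f1,32.1\n' > "$sample"

# -F',' sets the field separator; NF is the number of fields on the line.
n_cols=$(head -1 "$sample" | awk -F',' '{ print NF }')

echo "$n_cols"
# prints: 3
```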

What are the names of all the columns?

head -1 feds1.csv
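With many columns, the header is easier to read one name per line. A small sketch, again on a made-up sample file: tr swaps each comma for a newline, and nl numbers the lines, which gives you the column position to pass to cut later.

```bash
# Hypothetical sample header plus one row.
sample=$(mktemp)
printf 'adshex,flight_id,latitude\nA1,f1,32.1\n' > "$sample"

# Turn the comma-separated header into one column name per line.
cols=$(head -1 "$sample" | tr ',' '\n')

# Number each name so its position is visible.
printf '%s\n' "$cols" | nl
```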

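How many flights are in this data set?

cut -d ',' -f2 feds1.csv | sort | uniq | wc -l

This command extracts the second column, which is flight_id, and returns the count of its unique values. The -d ',' option is needed because cut splits on tabs by default. Note that the header line is included in the count.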
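A variation on the same pipeline, shown here on a made-up sample file: dropping the header first gives a count of flights only, and uniq -c shows how many rows each flight contributes. The IDs f1 and f2 are invented for illustration.

```bash
# Hypothetical three-row sample standing in for feds1.csv.
sample=$(mktemp)
printf 'adshex,flight_id,latitude\nA1,f1,32.1\nA1,f1,32.2\nA2,f2,40.7\n' > "$sample"

# Skip the header, keep column 2, and count distinct values.
# sort -u is shorthand for sort | uniq.
n_flights=$(tail -n +2 "$sample" | cut -d ',' -f2 | sort -u | wc -l | tr -d ' ')
echo "$n_flights"
# prints: 2

# Rows per flight, most frequent first (uniq -c prefixes each value with its count).
tail -n +2 "$sample" | cut -d ',' -f2 | sort | uniq -c | sort -rn | head -5

# The flight with the most rows in the sample.
busiest=$(tail -n +2 "$sample" | cut -d ',' -f2 | sort | uniq -c | sort -rn | head -1 | awk '{ print $2 }')
```

uniq only collapses adjacent duplicates, which is why the values are sorted first.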

touch quick_analysis.sh

nano quick_analysis.sh

Use either nano or vim to edit the shell script.
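As a starting point, the script could collect the profiling commands from this tutorial. The contents below are a hypothetical sketch, not a prescribed layout. It is written with a heredoc so you can paste it into the terminal in one step, but you can type the same lines between the EOF markers into nano instead.

```bash
# Write a small, hypothetical version of quick_analysis.sh.
cat > quick_analysis.sh <<'EOF'
#!/usr/bin/env bash
# Usage: bash quick_analysis.sh path/to/file.csv
# Falls back to feds1.csv when no argument is given.
file="${1:-feds1.csv}"

echo "Lines:   $(wc -l < "$file" | tr -d ' ')"
echo "Columns: $(head -1 "$file" | awk -F',' '{ print NF }')"
echo "Unique values in column 2: $(tail -n +2 "$file" | cut -d ',' -f2 | sort -u | wc -l | tr -d ' ')"
EOF

# Make the script executable.
chmod +x quick_analysis.sh
```

Run it with bash quick_analysis.sh feds1.csv from the ds_commandline folder.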